Many testing and software projects define quality metrics to capture and quantify “quality” for the customer. Quality is value, and value is usually multi-dimensional. In one context quality might mean speed; in another it might mean security. Quality varies with context, and we would expect the metrics to vary as well. Here is a definition of quality by Jerry Weinberg:

> Quality is value to someone who matters
>
> Gerald M. Weinberg

However, a common pitfall for teams is introducing proxy (surrogate) metrics instead of defining metrics that actually help the project.

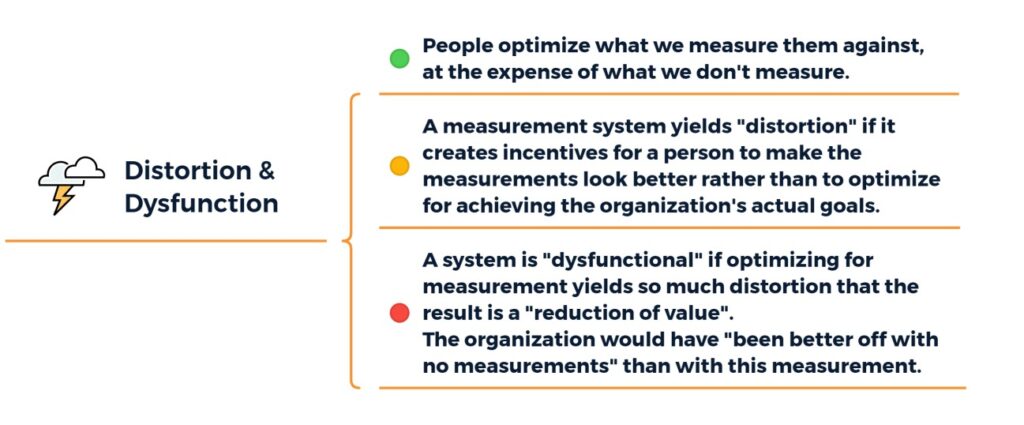

Such metrics can derail the project’s direction through “Distortion” and “Dysfunction”.

The above screenshots are a summary of Dr. Cem Kaner’s lecture on Measurement in Software Testing.

Typical metrics that can create distortion and dysfunction are:

- Defect Containment = Total internal defects versus production defects (per month) – Can incentivize teams to raise more internal defects, including meaningless ones or ones that could have been clubbed under a single bug.

- Defects Identified per person – Can incentivize testers to raise and argue over low-priority or trivial defects.

- Test Cases Reviewed – Can incentivize testers to get more tests approved in review instead of focusing on actual testing tasks. Can also trigger the fake test case economy.

- New TC Suggestion – Can incentivize testers to create small, repetitive test cases, or to write multiple test cases where the details could have been clubbed under one or a minimal number of tests.

- Scenario Automation – Can incentivize testers to keep their focus on writing scripts and checks even for areas that may be fragile or not suitable for automation. Sometimes, it can even change the priority of testers from testing to writing automation checks.

These kinds of metrics will eventually lead to a decrease in quality or a shift away from the quality mindset.

Does that mean all metrics are wrong? No.

In my experience, every team needs to identify what they are trying to measure and what distortions or dysfunctions could come from measuring it. In some cases, you will also find that certain metrics help you get closer to the actual business and quality goals.
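To make the distortion concrete, here is a minimal sketch of how Defect Containment can be gamed. The formula (internal defects divided by all defects) is one common reading of "internal versus production" and is an assumption here, as are the function name and the monthly numbers, which are invented for illustration.

```python
def defect_containment(internal_defects: int, production_defects: int) -> float:
    """Share of all defects caught before production (assumed formula)."""
    total = internal_defects + production_defects
    if total == 0:
        raise ValueError("no defects recorded for this period")
    return internal_defects / total


# Hypothetical month: 40 genuine internal defects, 10 production defects.
honest = defect_containment(40, 10)

# Same month and same product, but every internal defect is split into
# three tickets. Nothing about the product improved.
inflated = defect_containment(120, 10)

print(f"honest: {honest:.0%}, inflated: {inflated:.0%}")
```

The number of production defects is identical in both cases, yet the second figure looks noticeably better. That gap is the incentive to raise trivial or duplicate defects.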

Coverage is a good metric in testing. However, when I say coverage, I don’t just mean code coverage or automation coverage. Coverage could be any of the following:

- Structural Coverage

- Functional Coverage

- Data Coverage

- Interface Coverage

- Platform Coverage

- Operations Coverage

- Timing-Related Coverage
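Whatever the dimension, coverage can be treated as "how much of what matters did we actually exercise". A rough sketch for platform coverage follows; the `coverage` helper and the platform names are hypothetical, and the same idea would apply to data, interface, or operations coverage with a different set of items.

```python
def coverage(required: set[str], exercised: set[str]) -> float:
    """Fraction of required items that were exercised at least once."""
    if not required:
        raise ValueError("nothing to cover")
    return len(required & exercised) / len(required)


# Hypothetical platform list agreed with the product owner.
platforms_required = {"chrome", "firefox", "safari", "edge"}
# Platforms the team actually tested on; "opera" was never required.
platforms_tested = {"chrome", "firefox", "opera"}

platform_coverage = coverage(platforms_required, platforms_tested)
missing = sorted(platforms_required - platforms_tested)

print(f"platform coverage: {platform_coverage:.0%}, missing: {missing}")
```

The list of missing items is usually more useful in a conversation than the percentage itself, because it points at the next thing to test.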

Automation Coverage / Progress % is also a decent enough metric once the team is in regression scripting mode.

Decreasing production issues – Open bugs reported in production should be decreasing in general if the testing coverage gets better with time.
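One simple way to check "decreasing in general" without overreacting to a single bad month is to look at the slope of a fitted line. This is only a sketch: the `trend_slope` helper and the monthly counts are made up, and a plain least-squares line is an assumed choice rather than the only reasonable one.

```python
def trend_slope(counts: list[int]) -> float:
    """Least-squares slope of counts over equally spaced periods."""
    n = len(counts)
    if n < 2:
        raise ValueError("need at least two periods")
    mean_x = (n - 1) / 2
    mean_y = sum(counts) / n
    numerator = sum((x - mean_x) * (y - mean_y) for x, y in enumerate(counts))
    denominator = sum((x - mean_x) ** 2 for x in range(n))
    return numerator / denominator


# Hypothetical open production bugs at the end of each month.
open_bugs = [14, 12, 13, 9, 8, 6]
slope = trend_slope(open_bugs)

# A negative slope means the count is falling on average,
# even though month three was worse than month two.
print("improving" if slope < 0 else "not improving")
```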

However, one of my favorite metrics is Customer Feedback.

Once a project kicks off, quality should increase, and the customers (product owners in most cases) should notice it. They should be asked for feedback on how well the product meets their expectations and how efficiently issues are resolved. This is a golden rule, and it also serves as a constant reminder that the product must meet the customer’s expectations.

If you have any other metric ideas that you feel helped you and your team, do share them in the comments. I am happy to discuss.

Review Credits: Andy Hird
